I have good news and bad news. The good news is that I made a lot of progress in retrieving my old blog posts. The bad news is that I don’t think I can finish this today.

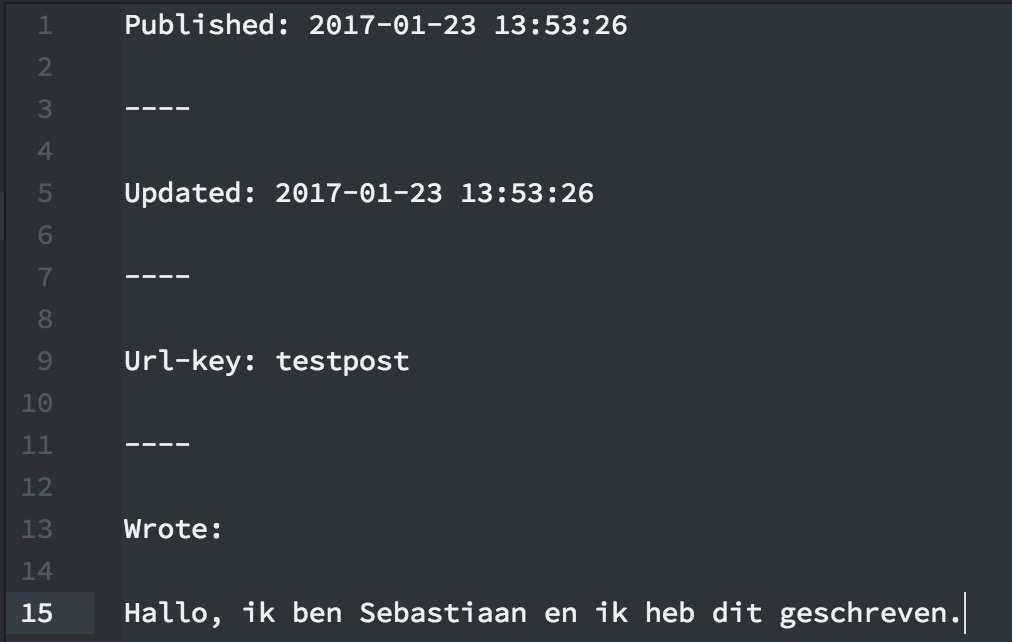

tl;dr: I have my posts in Kirby-formatted .txt-files now, but I need more time to think about moving the images.

In an attempt to own my data, I posted all my Tweets and Instagram photos onto my weblog. To do so, I started fresh, but I never got around to putting the old posts back. I have 8000 posts on this site, but the old ones are all social media reposts, no originals, even though I have had this domain since 2006.

There are roughly four periods between 2006 and now. In the first 5 years, I used a custom-made CMS, written by a 16-year-old who taught himself PHP by reading a 175-page book. (That’s me.) In 2009, I found it too insecure to keep running that way, so I moved to WordPress. Looking back, it wasn’t that insecure at all. Yes, I was 16 years old when I wrote it, but I knew about SQL injection at the time, and WordPress isn’t known for its security. My own blog at least had security by obscurity.

At the beginning of the WordPress era, I made a fresh start. Luckily I found the old server still running, so my posts should still be there in that database. (You can send a GET request with Host: seblog.nl to my mother’s website to get the last pre-Kirby version.) If not, I have a PDF somewhere, but that’s not great for importing.

After a while I moved away from WordPress in favor of .txt-files. I did a proper import back then, so that’s what I’m working with now: the .txt-version of my blog.

And those files are great, but hideous. Somehow I thought it was a nice idea to link them together with JavaScript, so this version of my blog is not archived by the Web Archive. It worked like an infinite scroll: I had one file called ‘blog.txt’, which only contained a link to the most recent post. At the end of that post was a link to the next post. I think my 404-page showed a C implementation of a linked list, because that was my inspiration.

Next to ‘blog.txt’ I also had ‘verhalen.txt’ and ‘tekstbeelden.txt’ for other streams of posts. In my import I wrote a nice little recursive function to get a list of all the stories, so I can tag them properly:

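```php
// Follow the chain of links starting at $from and collect every slug in $a.
function findnext($from, $links, &$a) {
    // Stop when the current post has no link to a next post.
    if (!empty($links[$from])) {
        $a[] = $links[$from];
        findnext($links[$from], $links, $a);
    }
}

$verhalen = [];
findnext('verhalen', $links, $verhalen);
```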
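For illustration, here is a minimal sketch of the same walk written as a loop, with a guard in case two posts ever link back to each other. The slugs in `$links` are made up, and `followChain` is a hypothetical name, not part of my actual import script.

```php
<?php
// Hypothetical link map: each key points to the slug that follows it.
$links = [
    'verhalen' => 'story-c',
    'story-c'  => 'story-b',
    'story-b'  => 'story-a',
];

// Iterative variant of the traversal: walk the chain from $from,
// stopping at the end of the chain or when a slug would repeat.
function followChain(string $from, array $links): array
{
    $chain = [];
    $seen = [$from => true];
    $current = $from;
    while (!empty($links[$current]) && !isset($seen[$links[$current]])) {
        $current = $links[$current];
        $seen[$current] = true;
        $chain[] = $current;
    }
    return $chain;
}

$verhalen = followChain('verhalen', $links);
print_r($verhalen);
```

The loop avoids deep recursion on a long stream of posts, and the `$seen` check means a broken link that points back into the chain cannot hang the import.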

There is also a lot of Markdown-that-isn’t-Markdown in my posts, which complicates things. Oh, and WordPress HTML. I’m trying to regex a lot of it out.

The last problem I have now is the images. In WordPress and in my own creation I stored all the images in one folder, but in Kirby the convention is to store them in the folder of the page. (Kirby is one big folder structure. I like that.) I need a way to either upload them when I post the posts via Micropub, or a way to identify the location of the newly created posts from the old slug, so I can move the files on the server. I don’t want to do it by hand.
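The second option might look roughly like the sketch below. It is only a sketch under assumptions: it presumes I can build one map from old slug to new page folder and another from old slug to image filenames, and every path, slug, and the function name `planImageMoves` are invented for the example. It only prints a plan; the actual move is commented out.

```php
<?php
// Sketch only: every path and map below is hypothetical.
// $slugToFolder maps an old slug to the page folder of the imported post.
// $slugToImages maps an old slug to the image files that post referenced.
function planImageMoves(string $oldImageDir, array $slugToFolder, array $slugToImages): array
{
    $moves = [];
    foreach ($slugToImages as $slug => $images) {
        if (!isset($slugToFolder[$slug])) {
            continue; // post not imported (yet), leave its images alone
        }
        foreach ($images as $image) {
            $moves[] = [
                'from' => $oldImageDir . '/' . $image,
                'to'   => $slugToFolder[$slug] . '/' . basename($image),
            ];
        }
    }
    return $moves;
}

$moves = planImageMoves(
    'old/uploads',
    ['hello-world' => 'content/2012/1-hello-world'],
    ['hello-world' => ['2012/cat.jpg'], 'missing-post' => ['dog.png']]
);

foreach ($moves as $move) {
    echo $move['from'], ' -> ', $move['to'], PHP_EOL;
    // rename($move['from'], $move['to']); // uncomment to actually move the file
}
```

Building the plan first and moving later makes it possible to read through the list before anything on the server changes.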

This blog post is getting hopelessly long. Maybe I could’ve fixed my image problem by now. But I want a clear head before I start importing. And a backup, but with 8000 posts that takes some time. I’ll resume tomorrow. At least I made progress!
