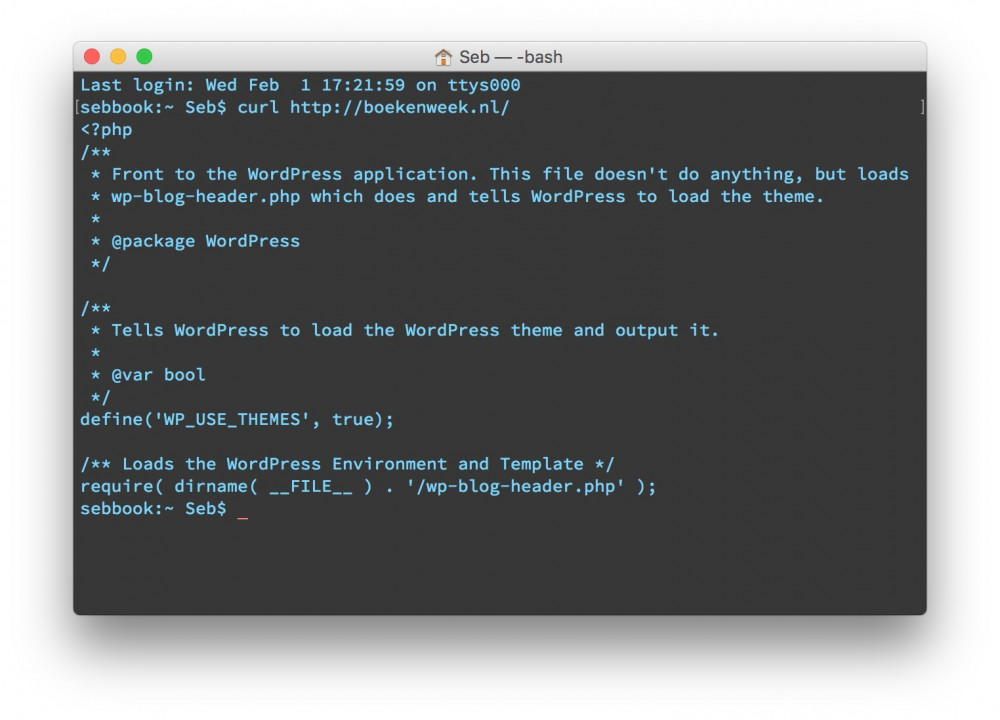

Day 18: Micropub helper class

Today I extended my new Indieweb Toolkit with a Micropub helper class. This allows you to easily send Micropub requests from your PHP code. Here’s an example:

```php
<?php
// Set the URL and access token ('xxx') of the endpoint to use
micropub::setEndpoint('http://yoursite.com/micropub', 'xxx');

// Shortcuts for common post types
micropub::reply('http://example.com/a-nice-post', "Oh what a post!");
micropub::like('http://example.com/another-post');
micropub::rsvp('http://example.com/an-event', 'maybe');

// Any set of Micropub properties; returns the URL of the new post
$newURL = micropub::post([
    'name' => 'Custom posts are possible!',
    'content' => 'This is a story about (...)',
    'category' => ['story', 'custom'],
    'mp-slug' => 'custom-posts-are-possible',
    'mp-syndicate-to' => 'https://twitter.com/example',
]);

// Redirect to the newly created post
go($newURL);
```

Note that this helper only creates posts, and relies heavily on the server you are sending requests to. The `mp-syndicate-to` value in the example above asks the remote server to also post the entry to Twitter; the helper itself does nothing to make that happen.
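For a sense of what travels over the wire: the Micropub spec lets a client create a post with a form-encoded POST that carries `h=entry` plus the post's properties, sent with an `Authorization: Bearer` header holding the access token; on success the server typically answers `201 Created` with a `Location` header pointing at the new post. The sketch below is not the toolkit's code. `micropubFormBody()` and the `...Properties()` helpers are hypothetical names, and the mapping of reply, like and RSVP onto the spec's `in-reply-to`, `like-of` and `rsvp` properties is an assumption about what the shortcuts send.

```php
<?php
// Hypothetical sketch (not the toolkit's code): encode Micropub properties
// as a form-encoded "create" request body.
function micropubFormBody(array $properties): string
{
    // A create request names the post type; 'entry' is the common case.
    $pairs = ['h=entry'];
    foreach ($properties as $name => $value) {
        // Multi-valued properties such as 'category' repeat "name[]=value".
        $values = is_array($value) ? $value : [$value];
        $key = is_array($value) ? urlencode($name) . '[]' : urlencode($name);
        foreach ($values as $item) {
            $pairs[] = $key . '=' . urlencode((string) $item);
        }
    }
    return implode('&', $pairs);
}

// Assumed mapping of the shortcut calls onto Micropub properties.
function replyProperties(string $url, string $content): array
{
    return ['in-reply-to' => $url, 'content' => $content];
}

function likeProperties(string $url): array
{
    return ['like-of' => $url];
}

function rsvpProperties(string $url, string $answer): array
{
    // An RSVP is a reply to the event plus an answer such as yes, no or maybe.
    return ['in-reply-to' => $url, 'rsvp' => $answer];
}

echo micropubFormBody(likeProperties('http://example.com/another-post')), "\n";
// prints "h=entry&like-of=http%3A%2F%2Fexample.com%2Fanother-post"
```

Custom posts work the same way: `mp-slug` and `mp-syndicate-to` are just more fields in the body, which is why acting on them is entirely up to the receiving server.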

A drop-in Micropub endpoint is hard, because every site stores its data differently, but I intend to extend this toolkit so that the different parts involved become easier to build. (In effect, this also makes my own code more reusable.)
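One part that could be shared regardless of storage is reading the incoming request. A minimal sketch, assuming a form-encoded create request that PHP has already parsed into `$_POST`; `parseMicropubCreate()` and its output shape (every value as a list, loosely modelled on Microformats2 JSON, with `mp-` commands kept apart from the post's properties) are hypothetical and not part of the toolkit. Token verification and saving the post, the site-specific parts, are left out.

```php
<?php
// Hypothetical sketch of a storage-agnostic endpoint step: turn a parsed
// form-encoded create request into a neutral structure a site can map
// onto its own storage.
function parseMicropubCreate(array $post): array
{
    $entry = [
        'type' => $post['h'] ?? 'entry',
        'properties' => [],
        'commands' => [],
    ];
    foreach ($post as $name => $value) {
        if ($name === 'h' || $name === 'access_token') {
            continue; // request metadata, not a property of the post
        }
        // Normalise every value to a list; PHP already turns "category[]" into an array.
        $values = is_array($value) ? array_values($value) : [$value];
        if (strpos($name, 'mp-') === 0) {
            $entry['commands'][$name] = $values; // instructions for the server, e.g. mp-slug
        } else {
            $entry['properties'][$name] = $values;
        }
    }
    return $entry;
}
```

A site would call `parseMicropubCreate($_POST)` after checking the token, then write `$entry['properties']` wherever it keeps its content and honour whichever `mp-` commands it supports.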

I published this post using today's code :D
