Seb likes this.

Seb likes this.

Day 18: Micropub helper class

Today I extended my new Indieweb Toolkit with a Micropub helper class. This allows you to easily send Micropub requests from your PHP code. Here’s an example:

    // Set URL and access token of the endpoint to use
    micropub::setEndpoint('http://yoursite.com/micropub', 'xxx');

    micropub::reply('http://example.com/a-nice-post', "Oh what a post!");
    micropub::like('http://example.com/another-post');
    micropub::rsvp('http://example.com/an-event', 'maybe');

    $newURL = micropub::post([
        'name' => 'Custom posts are possible!',
        'content' => 'This is a story about (...)',
        'category' => ['story', 'custom'],
        'mp-slug' => 'custom-posts-are-possible',
        'mp-syndicate-to' => 'https://twitter.com/example',
    ]);

    go($newURL);

Note that this only creates posts, and relies heavily on the server you are making requests to. The `mp-syndicate-to` in the above example tells the remote server that it should also post the entry to Twitter; this helper doesn’t do a thing for that itself.
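For a feeling of what such a helper has to send, here is a minimal sketch of a form-encoded Micropub create request. The function name `buildMicropubRequest` is made up for this example and is not the toolkit's actual code; it only builds the headers and body, and leaves the HTTP call itself to whatever client you use.

```php
<?php
// Hypothetical helper, NOT the Indieweb Toolkit's real implementation.
// Builds the headers and body of a form-encoded Micropub "create" request.
function buildMicropubRequest(string $token, array $properties): array
{
    // A form-encoded create request names the post type in `h`.
    $fields = ['h' => 'entry'] + $properties;

    $body = http_build_query($fields, '', '&');
    // PHP writes category[0]=...; Micropub's form encoding uses category[]=...
    $body = preg_replace('/%5B\d+%5D=/', '%5B%5D=', $body);

    return [
        'headers' => [
            'Authorization: Bearer ' . $token,
            'Content-Type: application/x-www-form-urlencoded',
        ],
        'body' => $body,
    ];
}

$request = buildMicropubRequest('xxx', [
    'content' => 'This is a story about (...)',
    'category' => ['story', 'custom'],
    'mp-syndicate-to' => 'https://twitter.com/example',
]);

echo $request['body'], "\n";
```

The `mp-syndicate-to` value travels along as just another field, which is why the syndication itself is entirely up to the server.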

A drop-in Micropub endpoint is hard, because every site stores its data differently, but I intend to extend this toolkit so that the different parts involved will be easier. (De facto making my own code more re-usable.)

Posted this post with use of today’s code :D

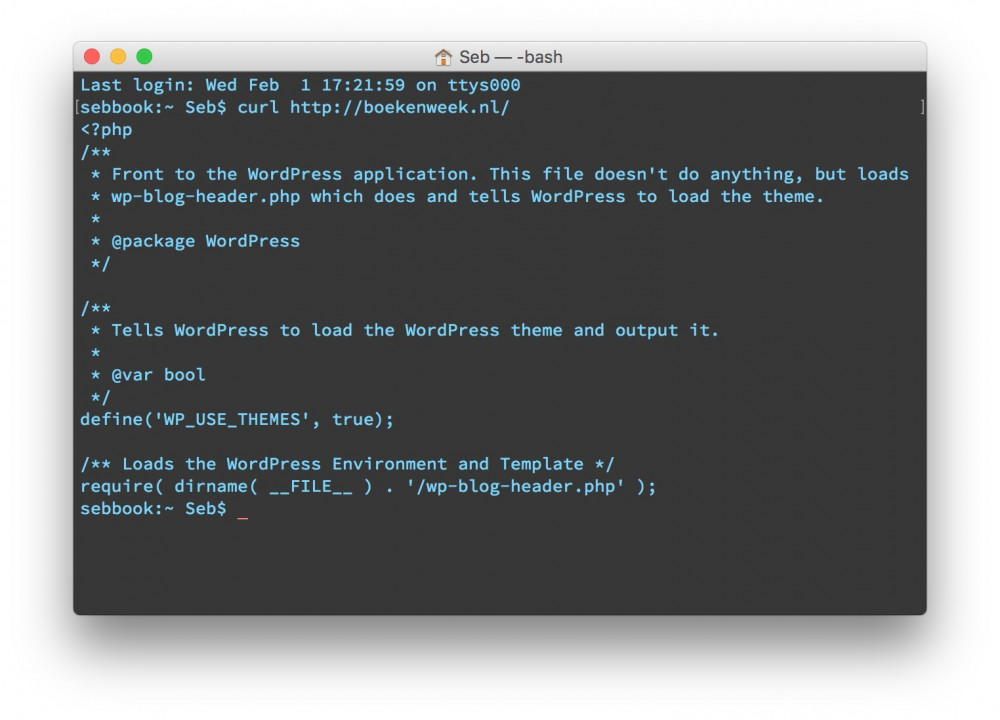

@raymonmens Oh, I wasn't that resourceful, but yes, the @cpnb would be wise to change that 😶

Ah, things are simply all going a bit wrong at the @cpnb...

Wow. #boekenweek

Day 17: okay, what now?

It’s only Day 17, and while there is always stuff to do, I feel like I got to a point where everything more or less works like I think it should. To put it another way: most of my itches have been scratched.

At the same time, I feel like I have implemented a lot of stuff others have already built in some other form. Apart from my questionable new post type, I have not really built new things. And that’s no problem: nothing is entirely new, all things build on top of other things. My problem with it is that I keep building things other people built before me, just because they built them. I need to step back and think again: do I need this?

Another problem with the challenge is that I did a lot of ‘small’ things already, but keep seeing only big things on my list. This is both a problem (I keep postponing the big things) and the solution (this challenge forces me to break things down!).

There are a few general areas where I want to make progress that are just a bit too big:

- Webmentions – I have them working, but I made some changes to the original Kirby plugin that might not be for everyone. I kind of want to start a new basic plugin, providing just sending and receiving Webmentions, possibly including a Panel Widget too (although I don’t use the panel myself). And then offer an extension to that plugin with Microformats parsing and display of comments.

- Micropub – I wrote a Micropub plugin for Kirby, and it’s only the best out there because it’s the only one out there. I branched off the main branch to do some guilt-free drafting of the [update](https://www.w3.org/TR/micropub/#h-update) function. My own site uses the latest commit of that branch; the code is horrible and I have never merged it. The problem here is that there is quite a bit of technical debt in that plugin. It needs fixing anyway, although it does work for my site.

- Importing old data – I’m just postponing this one because it’s not very spectacular. But I think it’s okay to import some stuff every now and then and call it a day. I still have Strava, Hyves, my old Facebook, old blogposts from before April 2009 and three Vines. The problem here is that my current data is not really clean. I have tweets and blogposts that could be deduped, and the utf-8 conversion for some posts seems to be weird. Cleaning data doesn’t sound like a good thing within this challenge, so I’m postponing importing altogether.

- Private posts – This one might not be as big as the others in terms of work, but it does come in steps. I want to use IndieAuth, but also give Silo-friends a way of seeing posts, via Twitter OAuth and the like. Oh and I might want to write my own Authorization / Token Endpoint for Micropub, but that’s a whole other point.

- Multilingual / multi topic – This was an itch for me, but this is one of the things I’ve pretty much fixed for the moment. I have multiple feeds of posts, tags, and thanks to indexing it goes fast as well. I might open different blogs on different domains, at some point, and use them like I use Twitter now: post on Seblog and push the post to those blogs. But that’s not a real itch for the moment. It can wait.

All in all there is enough to do, but none of the above things fit in a ‘today I fixed my X’. I need to start doing ‘today I fixed Y of my X’.

As a start of these things, I made a first version for an Indieweb Toolkit. It’s inspired by and makes use of the Kirby Toolkit. I want to put some basic Indieweb stuff in this thing, so I can re-use it for different projects. At this moment it only consists of a Webmention Endpoint discovery function and a wrapper for the php-mf2 Microformats Parser, but more to come!
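To give an idea of what Webmention Endpoint discovery involves, here is a rough sketch. It is not the toolkit's actual code: the function name `discoverWebmentionEndpoint` is made up, it expects the response headers and HTML to be fetched already, and it does not resolve relative URLs, which a real implementation should do.

```php
<?php
// Hypothetical sketch, NOT the Indieweb Toolkit's real function.
// Looks for rel="webmention" in HTTP Link headers first, then in the HTML.
function discoverWebmentionEndpoint(array $headers, string $html): ?string
{
    foreach ($headers as $header) {
        if (stripos($header, 'link:') !== 0) {
            continue;
        }
        // Naive split: assumes the URLs themselves contain no commas.
        foreach (explode(',', substr($header, 5)) as $link) {
            if (preg_match('/<([^>]*)>\s*;.*?\brel\s*=\s*"?([^";]*)/i', $link, $m)) {
                $rels = preg_split('/\s+/', strtolower(trim($m[2])));
                if (in_array('webmention', $rels, true)) {
                    return $m[1];
                }
            }
        }
    }

    if (trim($html) === '') {
        return null;
    }

    // Fall back to the first <link> or <a> element with rel="webmention".
    $previous = libxml_use_internal_errors(true);
    $doc = new DOMDocument();
    $doc->loadHTML($html);
    libxml_clear_errors();
    libxml_use_internal_errors($previous);

    $xpath = new DOMXPath($doc);
    foreach ($xpath->query('//link[@rel and @href] | //a[@rel and @href]') as $el) {
        $rels = preg_split('/\s+/', strtolower(trim($el->getAttribute('rel'))));
        if (in_array('webmention', $rels, true)) {
            return $el->getAttribute('href'); // may still be relative
        }
    }

    return null;
}
```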

Seb likes a post by Trump Draws.

Seb bookmarked this.

Seb bookmarked this.

Seb bookmarked this.

Day 16: Backing up my Gmail

With the recent events in the USA, I’ve decided that I want to move away from having data on American servers as much as possible. The first thing I want to tackle in that area is my e-mail. Although I do have an address at seblog.nl, I still just redirect it to Gmail.

My computer is currently in the process of backing up my main Gmail. I documented how I do it on the Indieweb Wiki:

Gmvault seems to be very simple and straightforward. It's on the command line, so it's scary for some users, but it does a good job of describing what it does. I did the following on my Mac, and since I can't remember installing `pip`, I think this works out of the box:

    sudo pip install --upgrade pip
    sudo pip install gmvault
    gmvault sync example@gmail.com

- Gmvault prompts for OAuth, with a description. Press enter to open the browser, and make sure you are logged in to the Gmail account in that browser.

- Do the OAuth in the browser and copy the key. Paste it in the Terminal.

- Gmvault does things! I got 6351 mails out of an old account in 16m 14s. It creates a folder called 'gmvault-db' in your home folder, with (in /db/) folders for every month. In those folders are, per e-mail, an '[id].meta' and an '[id].eml.gz'. The .meta is a JSON with info from Gmail (labels/tags, subject) and the .eml.gz is a gzipped .eml, which is just the plain-text e-mail with all the headers.
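Since the backup is just JSON plus gzipped plain text, reading a message back takes only a few lines. A minimal sketch, assuming the file layout described above; the function name `readGmvaultMessage` is made up, and which keys the .meta JSON contains exactly is something to check in your own backup.

```php
<?php
// Hypothetical helper for reading one backed-up message.
// $dir is one of the month folders inside gmvault-db/db/, $id the message id.
function readGmvaultMessage(string $dir, string $id): array
{
    $metaFile = "$dir/$id.meta";
    $emlFile = "$dir/$id.eml.gz";

    if (!is_file($metaFile) || !is_file($emlFile)) {
        throw new RuntimeException("Message $id not found in $dir");
    }

    return [
        // Info from Gmail (labels, subject, ...), decoded to an array
        'meta' => json_decode(file_get_contents($metaFile), true),
        // The plain-text e-mail with all the headers (needs the zlib extension)
        'eml' => gzdecode(file_get_contents($emlFile)),
    ];
}

// Usage (the path and id are placeholders):
// $message = readGmvaultMessage('/path/to/gmvault-db/db/some-month', 'some-id');
```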

Having the data is just one step. I will need to think about how I want to manage my e-mail in the future. For now I’m on Gmail still, but I am making plans.

To make today a bit more IndieWeb-relevant (e-mail is not web), I backed Micro.blog, because today is the last day of their Kickstarter campaign.

Although I really like that the project gives Indieweb a lot of attention, it felt wrong to only give to Micro.blog. So I also backed Aaron Parecki with the same amount, for his ongoing 100daysofindieweb. I use a lot of things he made or did first.

Seb bookmarked How to hack the upcoming Dutch elections by Sijmen Ruwhof.

I really like XRay's way of storing things (flat, knowing which properties will be string or array, and the rels list), and I wanted to use it with my reader. Having it as a library with #17 would be nice for that, but parsing the whole h-feed at once is also very handy.

So, allow me to brainstorm / share some h-feed stuff I learned with sebsel/lees :)

- I agree: the feed does not have to have an author. It can be used to define the author of the posts, but the feed itself can be authorless. Example of a mixed h-feed is Twitter > HTML through Granary.

- Different ways of presenting:

  1. have a `children` property with an array `[]` of objects `{}` that are just what you get in `data` when you look at a h-entry
  2. have a `children` property with an array `[]` of urls, and put the h-entry objects in `refs`

- Some h-feeds have no `u-url` for each h-entry. This makes it impossible to do 2. in those cases. But it might not be XRay's task to fix that. (Same for `dt-published`. A lot of WP sites have a `hentry` class with sometimes an `article-name` class, but dropped the rest of the Mf1. Again: might not be XRay's task to fix that.)

- What to do with `h-event` and `h-review` within the `h-feed`? -> have to detect type for each child.

- When looking at a home page (e.g. aaronparecki.com), I get a card, which is great because that's the main object on that page. But when using XRay in a /reader, I want a feed. Would be nice to have a `type=feed` parameter, to point XRay to the type of data you are looking for.

- Once you go h-feed, should you go RSS/Atom?
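To make the two ways of presenting a bit more concrete, here is a sketch that turns the first shape (embedded objects) into the second (urls plus `refs`). The array shapes and the function name `childrenToRefs` are made up for this example and are not XRay's actual output; children without a url end up in a separate `skipped` list, because they cannot be referenced.

```php
<?php
// Hypothetical shapes, NOT XRay's real format.
// Converts embedded children into a list of urls plus a refs map.
function childrenToRefs(array $feed): array
{
    $urls = [];
    $refs = [];
    $skipped = [];

    foreach ($feed['children'] ?? [] as $child) {
        if (empty($child['url'])) {
            // No u-url: this child cannot be referenced by url
            $skipped[] = $child;
            continue;
        }
        $urls[] = $child['url'];
        $refs[$child['url']] = $child;
    }

    return [
        'type' => 'feed',
        'children' => $urls,
        'refs' => $refs,
        'skipped' => $skipped,
    ];
}

$feed = childrenToRefs([
    'type' => 'feed',
    'children' => [
        ['type' => 'entry', 'url' => 'http://example.com/a-post', 'name' => 'A Post'],
        ['type' => 'event', 'url' => 'http://example.com/an-event', 'name' => 'An Event'],
        ['type' => 'entry', 'name' => 'A post without a url'],
    ],
]);
```

Each child keeps its own `type`, so a reader can still tell an event from an entry after the conversion.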

Seb likes a photo by Lisa Weeda.

Day 15: hacked my own site

Today I hacked my own site. I don't want to give details now, because it's late and it needs a proper write-up, but I will soon. It is fixed now. I will update this post with a link to a more detailed article once it’s there.

When can one officially put ‘hacker’ in one’s Twitter bio? I think I’m close.

Update: I wrote the post! It's here.

Doing digital things amid the paper at De Gids.

Seb likes a photo by Roy Santiago.

Seb bookmarked Stop Using Arial & Helvetica by King Sidharth.

Seb bookmarked Lossless Web Navigation with Trails by Patryk Adas.

Day 14: XRay like-lookup

I’ve been posting likes to Seblog for a few weeks now. I like the likes, but they all looked like ‘Seb likes this’, so I was like: looks like I like to fix my likes.

Today I hooked up XRay to Seblog, so now my site can see things on URLs I link to. (I actually run a version on a ‘secret’ URL, to keep things on my own server.) This way I can grab the name of the post I liked and show that, together with the author. If it has no name, but a photo, it says ‘Seb likes a photo’. It adds the author if it knows who it is. If it really doesn’t know anything about the page, it still defaults to ‘this’. I can fix those manually if I want.
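The fallback logic could look something like this. It is a sketch, not the code running on Seblog: the function name `likeTitle` is made up, the author is assumed to be a plain string, and the ‘a post’ wording for pages where only the author is known is my guess at a sensible middle step.

```php
<?php
// Hypothetical sketch of picking a title for a like.
// $data is the information known about the liked page (may be empty).
function likeTitle(array $data): string
{
    $author = $data['author'] ?? '';

    if (!empty($data['name'])) {
        $what = $data['name'];
    } elseif (!empty($data['photo'])) {
        $what = 'a photo';
    } elseif ($author !== '') {
        $what = 'a post'; // assumption: nothing known except the author
    } else {
        // Nothing known about the page at all
        return 'Seb likes this';
    }

    $title = 'Seb likes ' . $what;
    if ($author !== '') {
        $title .= ' by ' . $author;
    }

    return $title;
}
```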

XRay’s format consists of two parts: a data part, with information about the page it looked at, and a refs part, which is a list of URLs that are mentioned or embedded on the page. Retweets, for example, show the original tweet in the refs. I added a refs field to my pages, where I store the data part under the url of the page I mentioned and the refs part under the urls they came with.
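In code, putting both parts into one refs field comes down to something like the sketch below. It assumes a response that has been decoded to an array with a `data` key and a `refs` key, as described above; the function name `buildRefs` is made up, and the exact shape of a real XRay response should be checked against its documentation.

```php
<?php
// Hypothetical sketch: merge the data part and the refs part into one map,
// keyed by URL, ready to be stored in a refs field.
function buildRefs(string $url, array $response): array
{
    // The data part goes under the URL of the page I mentioned
    $refs = [$url => $response['data'] ?? []];

    // The refs part goes under the URLs it came with
    foreach ($response['refs'] ?? [] as $refUrl => $refData) {
        $refs[$refUrl] = $refData;
    }

    return $refs;
}

$refs = buildRefs('http://example.com/a-post', [
    'data' => [
        'name' => 'A Post',
        'author' => 'Someone',
        'repost-of' => 'http://another-example.com/a-photo',
    ],
    'refs' => [
        'http://another-example.com/a-photo' => [
            'photo' => 'http://another-example.com/a-photo/img.jpg',
            'author' => 'Someone Else',
        ],
    ],
]);
```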

An example, with Kirby Data and YAML:

    Like-of: http://example.com/a-post

    ----

    Refs:

    "http://example.com/a-post":
      name: A Post
      author: Someone
      repost-of: http://another-example.com/a-photo
    "http://another-example.com/a-photo":
      photo: http://another-example.com/a-photo/img.jpg
      author: Someone Else

I can be more efficient with the moment I grab the data. For example: to send webmentions, my server already reaches out to all links in the post to find their Webmention Endpoint. If I parse the page then, I don’t need another call from XRay later on.

I also need to fiddle a bit with the things I want to display. But for now it’s okay: my server has the data for my likes. I even download pictures if a post has a photo property. I now not only own my likes, I also have my own archive of the things I like.

Seb likes a photo by Daniël van der Meer.
